The vanishing gradient is a major problem in RNNs. To address it, we use a special kind of RNN layer called the LSTM (Long Short-Term Memory).

What is an LSTM? Here's a short explanation.

What are LSTMs?

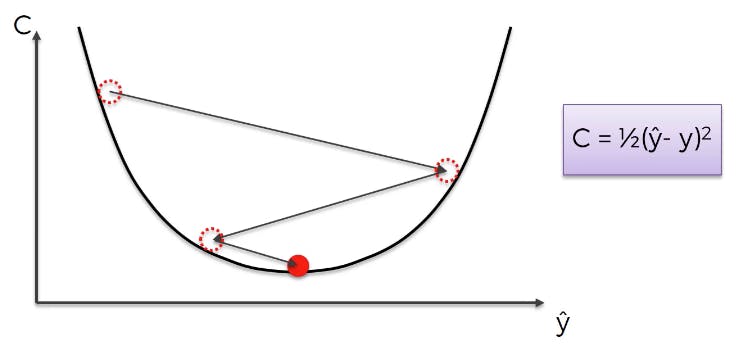

LSTMs were designed to address the vanishing gradient problem, so to understand LSTMs we first need to understand vanishing gradients properly. The problem arises when a neural network is trained with gradient descent. As a reminder, this is what gradient descent looks like.

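In deep learning, gradient descent is combined with the back-propagation algorithm to update the weights of the neural network. Back-propagation computes the gradient of the cost with respect to each weight, and gradient descent uses that gradient to adjust the weight. (Hyperparameters such as the learning rate are chosen separately and are not learned this way.)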
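As a reminder of the update rule, here is a minimal sketch of gradient descent on a toy one-parameter cost. The cost `J(w) = (w - 3)^2`, the function name `gradient_descent`, the learning rate and the step count are all illustrative assumptions, not something taken from a particular library or network.

```python
def gradient_descent(grad, w0, lr=0.1, steps=50):
    """Repeatedly move w a small step against the gradient of the cost."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad(w)  # update rule: w <- w - learning_rate * dJ/dw
    return w


# Toy cost J(w) = (w - 3)^2, whose gradient is dJ/dw = 2 * (w - 3).
# Its minimum is at w = 3, so the result should end up close to 3.
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)
```

In a real network the gradient comes from back-propagation rather than from a hand-written formula, but the update step has the same shape.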

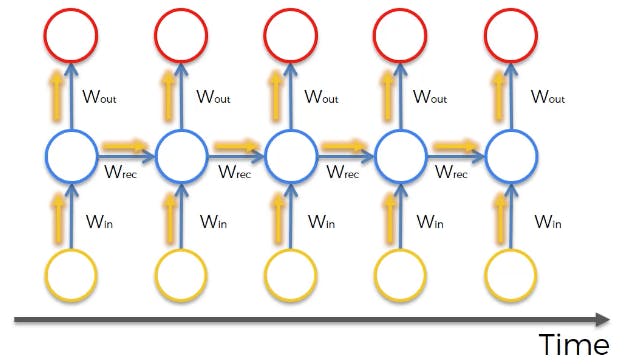

RNNs behave slightly differently, because they feed their output back into themselves through time.

The cost function is calculated at each time-step, and its gradient is propagated back through every earlier time-step. The further back it travels through the network, the more the changes made to the weights are "diluted".

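Back-propagation is multiplicative in nature: by the chain rule, the gradient that reaches an early time-step is a product of one factor for every time-step it passed through. When those factors are small, the gradient starts to vanish, and the weights that depend on it barely get updated.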

How does LSTM help solve this?

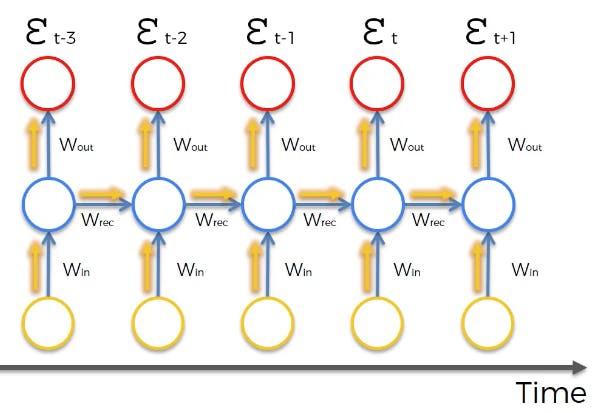

The factor that is multiplied in at each time-step during back-propagation is the recurrent weight, called W_rec.

When W_rec < 1 --> Vanishing Gradient,

When W_rec > 1 --> Exploding Gradient.
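To make the two cases concrete, here is a small sketch that multiplies a scalar recurrent factor by itself once per time-step. It treats W_rec as a single number, which is a simplifying assumption (in a real RNN it is a matrix and activation derivatives also enter the product), and the helper name `gradient_factor` and the choice of 50 time-steps are made up for illustration.

```python
def gradient_factor(w_rec, steps):
    """Scale applied to a gradient after flowing back through `steps` time-steps."""
    factor = 1.0
    for _ in range(steps):
        factor *= w_rec  # one multiplication by W_rec per time-step
    return factor


# Compare a factor slightly below 1, exactly 1, and slightly above 1.
for w_rec in (0.9, 1.0, 1.1):
    print(w_rec, gradient_factor(w_rec, steps=50))
```

Even a factor as close to 1 as 0.9 shrinks the gradient to well under 1% of its size after 50 steps, while 1.1 blows it up by more than a hundredfold. Only a factor of exactly 1 leaves it unchanged.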

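To a first approximation, an LSTM just sets W_rec = 1: its cell state is carried from one time-step to the next through a self-connection with weight 1, so the gradient along that path is neither shrunk nor amplified.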

This is how LSTMs tackle the vanishing gradient. There is, of course, more to LSTMs; more on that in upcoming threads.

This was a description of LSTMs from the perspective of the vanishing gradient.

Thank you for reading this far. I hope you enjoyed it :) Please consider following me for more.